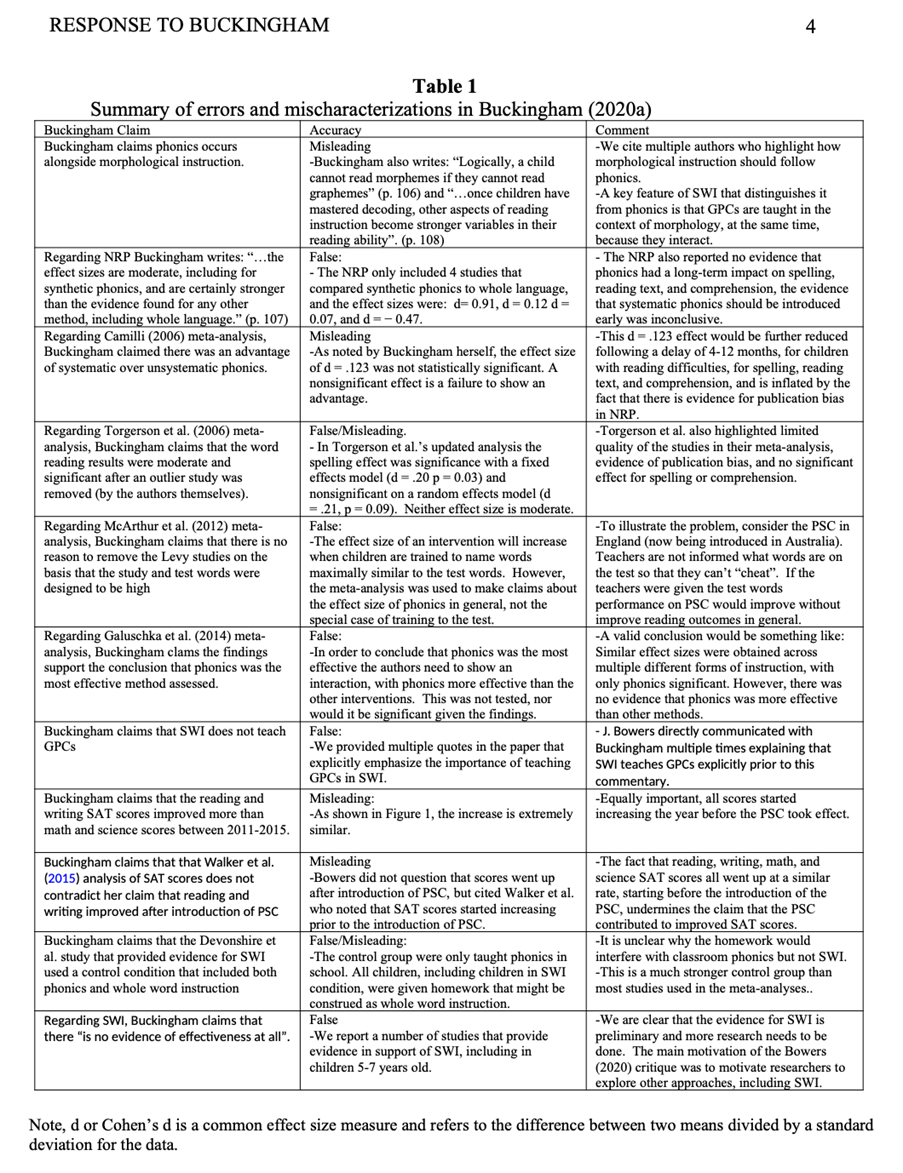

There are many things wrong with the science and research culture in the “Science of Reading” community, where the evidence for phonics is claimed to be so compelling. Here I want to focus on a recent critique of Bowers (2020) by Brooks (2023), just published in the journal “Review of Education”. As with a previous critique of Bowers (2020) by Buckingham (2020), published in the journal “The Educational and Developmental Psychologist”, I was not asked to review it, and in both cases the articles are chock-full of errors, irrelevancies, and mischaracterizations. In the case of Buckingham (2020), I wrote a response that included a table outlining the most important mistakes (see Table 1 at the end of this post). But the journal rejected my response and even refused to retract the most significant and most egregious errors. For more details see: https://jeffbowers.blogs.bristol.ac.uk/blog/buckingham-2020/. You can find the rejected article here: https://doi.org/10.31234/osf.io/f5qyu. The Brooks critique of Bowers (2020) is no better. Indeed, every point is either wrong, irrelevant, or misleading (apart from his catch of a typo in a date), and it gets embarrassing towards the end when discussing Sherman (2007). Let’s see if the journal will publish a response.

Bowers (2020) reviewed the 12 existing meta-analyses on phonics, as well as reading outcomes in England since phonics was legally mandated in state schools in 2007. The Brooks (2023) critique focuses on four of these meta-analyses and also criticizes a more recent article by Wyse and Bradbury (2022). Again, neither Wyse nor Bradbury was asked to review the Brooks article prior to publication. I’ll let Wyse and Bradbury respond to the points directed at them and focus on my own work, going through Brooks’ points in order.

First, he criticizes my analysis of Galuschka et al. (2014), who found similar effect sizes for phonics (g′ = 0.32), phonemic awareness instruction (g′ = 0.28), reading fluency training (g′ = 0.30), auditory training (g′ = 0.39), and color overlays (g′ = 0.32), and who nevertheless concluded that phonics was the “most” effective method because only phonics had a significant effect (a consequence of the phonics condition including many more studies). I noted that the conclusion that phonics is the most effective method requires a significant interaction: the effect of phonics needs to be larger than the effect of the other methods. The interaction was not reported (and would not be significant given the similar effect sizes).
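To make the statistical point concrete, here is a minimal Python sketch. The g′ values are the ones reported above; the standard errors are invented for illustration (the assumption being that the phonics estimate is more precise because it pools more studies), and the function names are hypothetical. It shows how one effect can be "significant" and another not, while the test that actually bears on "most effective", the test of their difference, is null.

```python
from math import sqrt
from statistics import NormalDist


def two_sided_p(z: float) -> float:
    """Two-sided p-value for a standard-normal test statistic."""
    return 2 * (1 - NormalDist().cdf(abs(z)))


def effect_p(g: float, se: float) -> float:
    """p-value for H0: effect == 0, using a Wald statistic z = g / se."""
    return two_sided_p(g / se)


def difference_p(g1: float, se1: float, g2: float, se2: float) -> float:
    """p-value for H0: effect1 == effect2, assuming independent estimates."""
    z = (g1 - g2) / sqrt(se1**2 + se2**2)
    return two_sided_p(z)


# Pooled effects (g') for two conditions, as reported for Galuschka et al. (2014).
G_PHONICS, G_OVERLAYS = 0.32, 0.32
# HYPOTHETICAL standard errors: phonics pools more studies, so its SE is smaller.
SE_PHONICS, SE_OVERLAYS = 0.07, 0.25

p_phonics = effect_p(G_PHONICS, SE_PHONICS)     # small p: "significant"
p_overlays = effect_p(G_OVERLAYS, SE_OVERLAYS)  # larger p: "not significant"
p_diff = difference_p(G_PHONICS, SE_PHONICS, G_OVERLAYS, SE_OVERLAYS)  # z = 0

print(f"phonics vs 0:        p = {p_phonics:.4f}")
print(f"overlays vs 0:       p = {p_overlays:.4f}")
print(f"phonics vs overlays: p = {p_diff:.4f}")
```

The first two tests differ only because of the (assumed) precision of each estimate. The third has z = 0 whatever standard errors are plugged in, because the two g′ values are identical.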

In response, Brooks writes that Bowers “chides Galuschka et al. for not having carried out an analysis of the interaction between the various approaches—a mistaken demand as that term refers to two independent variables, whereas here there would be only one (type of approach).” This is simply incorrect. It is easy to test for an interaction by assessing whether phonics is more effective than the other interventions. Contrary to Brooks, there are multiple independent variables in this study (the different interventions). But if you want to say that the different interventions are just different levels of one independent variable (“type of approach”), then you still need to ask whether the different levels of instruction interact, with better outcomes for phonics. Buckingham (2020) also challenged me on this most basic point of statistics. More generally, it just does not make sense to take a study that reports similar effect sizes for a wide range of interventions as evidence that phonics is best.
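If "type of approach" is treated as a single factor with several levels, a standard meta-analytic way to ask whether the level matters is a subgroup (moderator) test: a Q_between statistic compared against a chi-square distribution with k - 1 degrees of freedom. The sketch below uses the five g′ values reported by Galuschka et al. (2014) with invented standard errors, so the numbers are illustrative only. It is a simple fixed-effect version; a random-effects analysis would differ in detail, and the helper names are hypothetical.

```python
from __future__ import annotations

from math import exp, factorial


def chi2_sf_even_df(x: float, df: int) -> float:
    """Upper-tail chi-square probability; this closed form is valid for even df only."""
    if df <= 0 or df % 2:
        raise ValueError("this closed form only covers positive even df")
    half = x / 2
    return exp(-half) * sum(half**j / factorial(j) for j in range(df // 2))


def q_between(effects: dict[str, tuple[float, float]]) -> tuple[float, int]:
    """Fixed-effect Q statistic for differences among subgroup estimates.

    effects maps subgroup name -> (pooled effect, standard error).
    Returns (Q, degrees of freedom).
    """
    weights = {name: 1 / se**2 for name, (_, se) in effects.items()}
    total_w = sum(weights.values())
    grand_mean = sum(weights[n] * g for n, (g, _) in effects.items()) / total_w
    q = sum(weights[n] * (g - grand_mean) ** 2 for n, (g, _) in effects.items())
    return q, len(effects) - 1


# g' values as reported above; the standard errors are HYPOTHETICAL.
subgroups = {
    "phonics": (0.32, 0.07),
    "phonemic awareness": (0.28, 0.15),
    "reading fluency": (0.30, 0.15),
    "auditory training": (0.39, 0.20),
    "color overlays": (0.32, 0.25),
}

q, df = q_between(subgroups)
p = chi2_sf_even_df(q, df)
print(f"Q_between = {q:.3f}, df = {df}, p = {p:.3f}")
```

With these assumed standard errors the test finds no evidence that intervention type moderates the effect, which is what one would expect when all five point estimates lie within 0.11 of each other. An actual re-analysis would need the standard errors from the paper itself.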

Next, Greg criticizes my analysis of Han (2009). He makes his one correct point here – I did cite this dissertation as being published in 2010 rather than 2009. Below is the sum total of what I wrote in Bowers (2020), where I combined my critique of this work with another meta-analysis:

Han (2010) and Adesope, Lavin, Tompson, and Ungerleider (2011). These authors reported meta-analyses that assessed the efficacy of phonics for non-native English speakers learning English. Han (2010) included five different intervention conditions and dependent measures and reported the overall effect sizes as 0.33 for phonics, 0.41 for phonemic awareness, 0.38 for fluency, 0.34 for vocabulary, and 0.32 for comprehension. In the case of Adesope et al. (2011), the authors found that systematic phonics instruction improved performance (g = + 0.40), but they also found that an intervention they called collaborative reading produced a larger effect (g = + 0.48) as did a condition called writing (structured and diary) that produced an effect of g = + 0.54. Accordingly, ignoring all other potential issues discussed above, these studies do not provide any evidence that phonics is the most effective strategy for reading acquisition.

Brooks is critical of my analysis of Han because he claims that there is a flaw in the Han study, writing “[Han] gives a list of 11 ‘Instructional activities’ which are classified as phonics—but only one, or at most two, deserve that label.” But this is simply irrelevant. For the sake of argument, let’s accept that Han’s meta-analysis is flawed. Then, fine, my conclusion stands that this study provides no evidence for phonics. Indeed, it strengthens my conclusion: if the selection of studies included in the meta-analysis was flawed, not only is the 0.33 effect for phonics no larger than those for the alternative methods, it is also a bogus finding.

Next, he criticizes my analysis of Sherman (2007). This is the sum total of what I wrote on this meta-analysis:

Sherman (2007). Sherman compared phonemic awareness and phonics instruction with students in grades 5 through 12 who read significantly below grade-level expectations. Neither method was found to provide a significant benefit.

Greg claims that Sherman did indeed obtain a significant benefit of phonics, writing:

Bowers (2020: 695) says that Sherman (2007) found no overall effect of phonics. This is inaccurate. The top line of data in Sherman’s table 20 (p. 69) shows an ES of 0.33 for the impact of phonics on literacy overall. The confidence interval for the ES is given as 0.13 Lower, 0.52 Upper; since this does not cross zero, the ES must be significant at least at p<0.05, even though Sherman does not give a probability value or discuss this result.

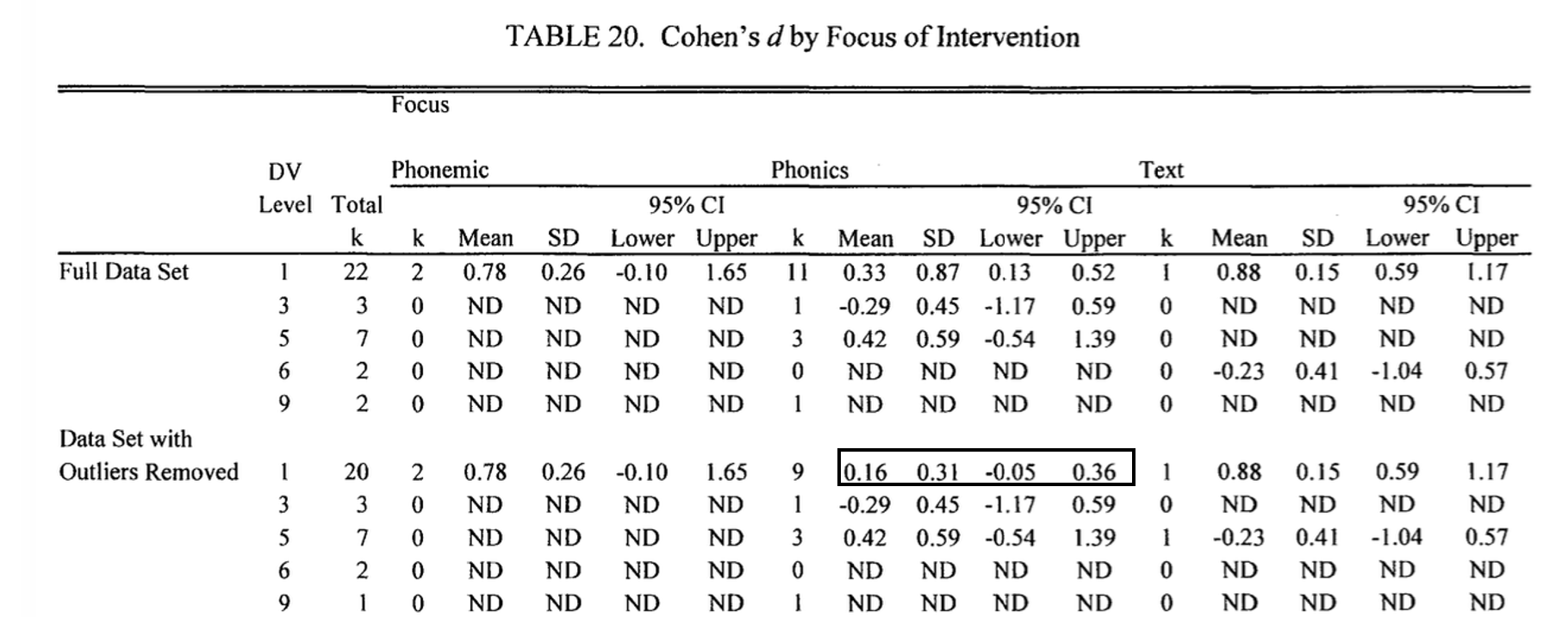

There are a few problems. First, here is the table that Greg is referring to. He is indeed correct that the confidence intervals do not overlap with zero in the analysis carried out on the full dataset, but the analysis that excluded outliers does:

Now, you might wonder whether outlier studies should be excluded, but here is a description of the outlier studies taken from the dissertation:

Including a study with an effect size (Cohen’s d) of 7.69 is absurd. Indeed, this effect size is so large that I expect it is a mistake in Sherman’s PhD thesis. But it is not worth looking into this when you read the following from the thesis:
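A crude illustration of why a single study with d = 7.69 matters. Only the 7.69 comes from the text; the other five effect sizes are invented, and the unweighted mean is a simplification (a real meta-analysis would weight studies by precision). It shows how one extreme value can dominate a mean while a robust summary such as the median barely moves.

```python
from statistics import mean, median

# HYPOTHETICAL study-level Cohen's d values; only 7.69 is taken from the text.
typical_studies = [0.10, 0.25, 0.40, -0.05, 0.30]
outlier = 7.69
with_outlier = typical_studies + [outlier]

# Unweighted summaries, for illustration only.
print(f"mean without outlier: {mean(typical_studies):.3f}")
print(f"mean with outlier:    {mean(with_outlier):.3f}")
print(f"median with outlier:  {median(with_outlier):.3f}")
```

In this toy set the outlier multiplies the mean roughly sevenfold, whereas the median stays close to the typical studies.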

Because of the small number of studies and the variability of the population studied, the alpha level was relaxed to 0.25 to explore statistical significance of main effects or interaction effects at this level. The impact of group size and reading level on effect size was significant in many of the analyses at a 0.25 alpha level.

In other words, because Sherman (2007) was not obtaining significant effects at the .05 level, she decided to work with a .25 level of significance! So, even if we consider the full dataset that includes a study with a Cohen’s d of 7.69, the analysis only shows that the effect is significant at the .25 level, not the .05 level as Brooks claims.
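Whether a confidence interval that excludes zero implies p < 0.05 depends on the confidence level at which the interval was computed, which the passages quoted here do not state. The Python sketch below back-calculates the implied p-value from the reported estimate (0.33) and interval (0.13 to 0.52) under two assumptions: a conventional 95% interval, and a 75% interval matching the relaxed alpha of 0.25. It also assumes a symmetric, normal-theory interval, which the reported bounds roughly are; the function name is hypothetical.

```python
from statistics import NormalDist


def implied_p(estimate: float, lower: float, upper: float, level: float) -> float:
    """Back-calculate a two-sided p-value from a symmetric normal-theory CI.

    level is the confidence level of the interval, e.g. 0.95 or 0.75.
    """
    z_crit = NormalDist().inv_cdf(1 - (1 - level) / 2)  # about 1.96 for a 95% CI
    se = (upper - lower) / (2 * z_crit)                  # half-width divided by z_crit
    z = estimate / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Full-dataset phonics row, as quoted by Brooks.
ES, LOWER, UPPER = 0.33, 0.13, 0.52

for level in (0.95, 0.75):
    print(f"if the CI is {level:.0%}: implied p = {implied_p(ES, LOWER, UPPER, level):.3f}")
```

Under the 95% reading the effect would clear p < 0.05 comfortably; under the 75% reading it would sit just above 0.05. The sketch cannot say which level the thesis used, only that the inference turns on it.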

Finally, Greg criticizes my analysis of Camilli et al. (2003), writing:

When I pointed out the fragility of Camilli et al.’s (2003) analysis, Bowers (personal communication, 9 March 2023) replied: ‘My critique does not hinge on the Camilli et al. findings (there is little evidence for phonics even if you ignore his [sic] point).’ Despite what he says, Bowers’ argument does in fact make considerable use of ‘the Camilli et al. findings’:

This leaves the impression that I’m conceding his point regarding Camilli et al. (2003). But I am not. He has selectively quoted me in a misleading way. Here is what I wrote in that email:

“My critique does not hinge on the Camilli et al. findings (there is little evidence for phonics even if you ignore his point), but I don’t understand your criticism of the study. There are essentially no forms of instruction used in school that use NO phonics, so studies that completely ignore phonics are not appropriate to include in a control condition if you want to claim that systematic phonics is needed to improve existing classroom instruction.”

That is it! This covers all the points Brooks raised to challenge my critique of the evidence for phonics. It is an impressive repeat of Buckingham (2020): every substantive point is wrong, irrelevant, or misleading. This is why the journal “Review of Education” should have asked me to review Brooks’ manuscript. Indeed, that should be standard policy – if a journal is considering publishing a critique of an article, the authors of the published work should have a chance to review the submitted manuscript (and an opportunity to respond if the work is published). Now we have Greg Ashman, Nate Joseph, Timothy Shanahan, Pamela Snow, Kevin Wheldall, Dylan Wiliam, and others retweeting this flawed article that should never have been published in the first place. Let’s see if any of these authors will retweet this response.

I should note that a much more reasonable response to Bowers (2020) was published by Fletcher et al. (2021) in the journal “Educational Psychology Review”. I was asked to review the submission and identified multiple mistakes that were fixed prior to publication, and I was invited to submit a response (Bowers, 2021) that was in turn reviewed by Fletcher. I think the criticisms by Fletcher et al. (2021) were misguided, but at least it was a constructive exchange, and I think some common confusions were clarified. Anyone who reads the full exchange will learn something, but that is not the case here, other than perhaps learning how low the standards are when making pro-phonics claims.

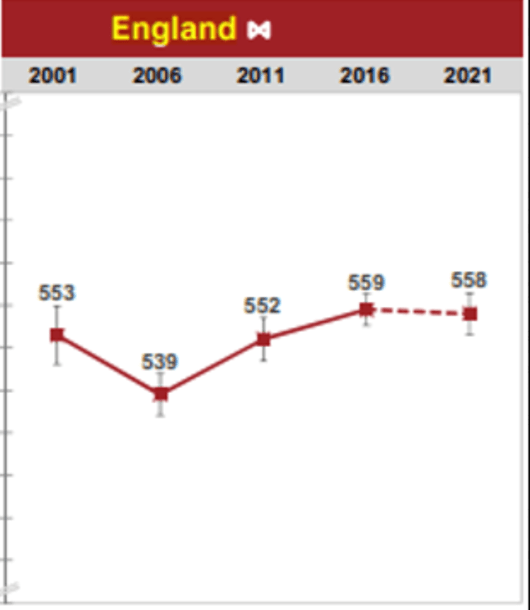

And the unjustified scientific claims used to support the efficacy of phonics just do not stop. For example, the recently released Progress in International Reading Literacy Study (PIRLS) showed that England ranked 4th (of 61 countries). An impressive performance indeed. The problem is that many researchers are attributing this outcome to phonics. For example, Kathy Rastle tweeted:

Here are the PIRLS results that led her to this conclusion. See a problem?

In a series of tweets to Rastle, I pointed out two additional problems with attributing the high PIRLS ranking to phonics. First, Singapore, Ireland, and Northern Ireland have consistently outperformed England in English reading despite not requiring phonics (or the phonics screening check). Part of the reason England went up in the most recent rankings is that Ireland and Northern Ireland were excluded from the comparison. (They again scored better but were excluded because a COVID-related delay in assessing children on PIRLS meant that their children were slightly older.) Second, England scored better than Italy and Spain (and many other countries) that have writing systems with consistent grapheme-phoneme correspondences, where children would score near 100% (at a younger age) if they were given a phonics screening check. However effective mandated phonics has been in improving English children’s naming of regular words (and nonwords), English children are not as good as Italian and Spanish children at naming words (and nonwords) in their own languages. Accordingly, the higher English scores in PIRLS (which measures reading comprehension) must reflect something other than phonics. That is worth exploring. But no matter: many researchers are attributing the good results to phonics. I received no response.

References:

Adesope, O. O., Lavin, T., Thompson, T., & Ungerleider, C. (2011). Pedagogical strategies for teaching literacy to ESL immigrant students: a meta-analysis. British Journal of Educational Psychology, 81(Pt 4), 629–653.

Bowers, J. S. (2020). Reconsidering the evidence that systematic phonics is more effective than alternative methods of reading instruction. Educational Psychology Review, 32, 681–705.

Bowers, J. S. (2021). Yes children need to learn their GPCs but there really is little or no evidence that systematic or explicit phonics is effective: A response to Fletcher, Savage, and Vaughn (2020). Educational Psychology Review. https://doi.org/10.1007/s10648-021-09602-z

Bowers, J. S., & Bowers, P. N. (2021). The science of reading provides little or no support for the widespread claim that systematic phonics should be part of initial reading instruction: A response to Buckingham. https://doi.org/10.31234/osf.io/f5qyu

Brooks, G. (2023). Disputing recent attempts to reject the evidence in favour of systematic phonics instruction. Review of Education, 11(2), e3408.

Buckingham, J. (2020). Systematic phonics instruction belongs in evidence-based reading programs: A response to Bowers. The Educational and Developmental Psychologist, 1-9.

Camilli, G., Vargas, S., & Yurecko, M. (2003). Teaching children to read: The fragile link between science and federal education policy. Education Policy Analysis Archives, 11(15), 1–51.

Fletcher, J. M., Savage, R., & Vaughn, S. (2021). A commentary on Bowers (2020) and the role of phonics instruction in reading. Educational Psychology Review, 33, 1249-1274.

Galuschka, K., Ise, E., Krick, K., & Schulte-Körne, G. (2014). Effectiveness of treatment approaches for children and adolescents with reading disabilities: a meta-analysis of randomized controlled trials. PLoS One, 9(2), e89900. https://doi.org/10.1371/journal.pone.0089900.

Han, I. (2009). Evidence-based reading instruction for English language learners in preschool through sixth grades: a meta-analysis of group design studies. Retrieved from the University of Minnesota Digital Conservancy, http://hdl.handle.net/11299/54192.

Sherman, K. H. (2007). A meta-analysis of interventions for phonemic awareness and phonics instruction for delayed older readers [Doctoral dissertation, University of Oregon]. ProQuest Dissertations Publishing (3285626).

Wyse, D., & Bradbury, A. (2022). Reading wars or reading reconciliation? A critical examination of robust research evidence, curriculum policy and teachers’ practices for teaching phonics and reading. Review of Education, 10(1), 1–53. https://doi.org/10.1002/rev3.3314

Here is the table from Bowers and Bowers (2021) responding to Buckingham (2020). Buckingham did not respond to any of the points, nor has anyone else that I am aware of. But Buckingham did block me on Twitter and suggested she would sue me for comments in the following blog post, which details the sad episode: https://jeffbowers.blogs.bristol.ac.uk/blog/buckingham-2020/

Hi Jeff,

Have I got this right? You would agree with this statement by Reid Lyon:

“All good readers are good decoders. Decoding should be taught until children can accurately and independently read new words. Decoding depends on phonemic awareness: a child’s ability to identify individual speech sounds. Decoding is the on-ramp for word recognition.”

https://readinguniverse.org/article/explore-teaching-topics/big-picture/ten-maxims-what-weve-learned-so-far-about-how-children-learn-to-read

But you don’t think there’s convincing evidence that phonics is the best way to teach decoding in order to achieve word recognition?

Harriett

Hi Harriett, I agree that the ability to decode is important. But systematic phonics is not the only way to teach decoding, and I’m claiming there is little or no evidence that systematic phonics is more effective than alternative common methods used in schools. Brooks’ article only highlights my point — his response does not stand up to scrutiny.

Well, Jeff, you can imagine how frustrating it is for me (as I’ve expressed before) to read the back and forth on this issue without having the statistical smarts to do the analysis myself. You say that “systematic phonics is not the only way to teach decoding and . . . there is little or no evidence that systematic phonics is more effective.”

Over the past two months, I’ve been teaching three times a week in a first-grade class whose teacher is a recent college graduate with no teacher training. I introduce the phoneme-grapheme connections by dictating ‘word chains’ (see “Focusing Attention on Decoding for Children With Poor Reading Skills: Design and Preliminary Tests of the Word Building Intervention” by McCandliss, Beck, and Perfetti, and Isabel Beck’s work on ‘minimal contrasts’ in her book Making Sense of Phonics), and then we practice reading these patterns in the decodable books, progressing systematically from the basic code to the advanced code by the end of the year. I am grateful to be able to proceed in this way, because it lets me make sure the code is ‘taught’ and not ‘caught’ incidentally (which is especially problematic for struggling students).

But I also recognize the importance of exposing students to more spelling patterns than what I’m teaching so that Share’s Self-Teaching Hypothesis and Seidenberg’s emphasis on implicit learning can also come into play. I don’t call what I do Structured Literacy–I call it I.N.C.L.U.S.I.V.E. Literacy: Integrating the Necessary Components of Literacy Under a Systematic and Intentional–yet VARIABLE–Evidence-based approach.

My follow-up question: I’m assuming that as a proponent of SWI, you do not think that phonics, systematic or otherwise, is the best way to teach decoding. Is that still your position?

The ordering of the Brooks/Bowers exchange is a bit confusing. The start of the exchange is Brooks’ second point (where I respond), and then continues on at his second post.

Jeff

With regard to Galuschka et al., I definitely did not misdescribe the situation to my colleague – I sent him your article. So I repeat: I doubt you can claim greater statistical expertise than an FRSS, and it therefore seems to me that he is right on this and you are wrong.

With regard to Galuschka et al., Han and Sherman, you seem to be interpreting me as saying that they provide evidence that phonics is more effective than ‘other common methods’ – I am not; at no point have I argued or suggested that phonics is more or less effective than (say) coloured overlays. The only comparisons I have dealt with are between systematic phonics and unsystematic or no phonics. By conflating the two sets of comparisons you come close to misrepresenting my position.

I criticised your use of these three studies because it seemed to me that you had misinterpreted them in various ways. And so far from agreeing with your latest statements, I find your logic faulty – these studies may not provide any evidence that phonics is more effective, but where their figures are unreliable they also provide no evidence that phonics is equally or less effective – they provide no relevant evidence at all.

You say: ‘Whether Camilli et al.’s classification of studies in the NPR into different degrees of systematic phonics is reliable or not is an interesting question, but regardless, it undermines the use of the NPR to make claims that systematic phonics is better than whole language ‘ – no it doesn’t. I think I have sufficiently demonstrated that their classification is unreliable, and therefore cannot be used to undermine the NRP analysis. I refer you also to the critique of Camilli et al. by Stuebing et al. (2008), summarised in my 2022 article and showing that Camilli et al.’s analysis is not the only way of investigating the NRP data.

The NRP did analyse the few studies (then) that had done follow-up testing – you will find a summary of their findings and a reference to the original source in Ehri et al. (2001) in my 2022 article.

Of course the NRP data showed less effect for struggling readers – and again in my 2022 article you will find I specifically looked into that.

In my RoE article and in this thread I have not dealt with ‘the many other meta-analyses [you] reviewed … [or] the reading outcomes in England since systematic phonics was introduced in 2007’ because they were not relevant here. Yet again, these are dealt with in my 2022 article. And where you run on from that point to other people’s bogus claims about the effect of the introduction of systematic phonics, you are close to accusing me of holding views that I in fact oppose – a possibly misleading conflation.

At this point I am minded to leave this alone, remembering what Attlee once said to a member of his cabinet: ‘A period of silence on your part would be greatly appreciated.’

Dear Greg, I hate to disagree with an expert statistician with an FRSS, but there is no statistic that can show that a .32 effect size for phonics is larger than a .32 effect size for colour overlays. Therefore, Galuschka et al.’s (2014) meta-analysis provides no evidence that phonics is more effective than colour overlays (the same applies to a range of methods that all obtained similar effect sizes to phonics – in some cases the effect sizes for alternative methods were numerically larger). Perhaps the FRSS can post himself and explain what I’ve got wrong here. Note: This is not an endorsement of colour overlays.

As you yourself (correctly) wrote in your article: “Bowers (‘Reconsidering the evidence that systematic phonics is more effective than alternative methods of reading instruction’, Educational Psychology Review, 32, 2020) attempts to show that there is little or no research evidence that systematic phonics instruction is more effective than other commonly used methods.” We seem to agree that the Han (2009) and Sherman (2007) meta-analyses provide no evidence that phonics is more effective. Perhaps I could have criticized these studies more (I barely mentioned them, as they are unpublished PhD theses and consider populations different from the one I was focusing on: second language learners and children in grades 5-12, respectively), but my point stands: they provide no evidence that phonics is more effective.

In response to my statement “the NRP did not even assess the long-term effects of spelling, reading texts, or reading comprehension”, you write: “The NRP did analyse the few studies (then) that had done follow-up testing – you will find a summary of their findings and a reference to the original source in Ehri et al. (2001) in my 2022 article.”

But I am aware that the NRP did assess the long-term impact of phonics. Here is what I wrote in Bowers (2020). Note the last sentence:

“In sum, rather than the strong conclusions emphasized the executive summary of the NRP (2000) and the abstract of Ehri et al. (2001), the appropriate conclusion from this meta-analysis should be something like this:

Systematic phonics provides a small short-term benefit to spelling, reading text, and comprehension, with no evidence that these effects persist following a delay of 4– 12 months (the effects were not reported nor assessed). It is unclear whether there is an advantage of introducing phonics early, and there are no short- or long-term benefit for majority of struggling readers above grade 1 (children with below average intelligence). Systematic phonics did provide a moderate short-term benefit to regular word and pseudoword naming, with overall benefits significant but reduced by a third following 4–12 months”.

And this conclusion is only valid if you ignore the many flaws of the NRP. Once you take into account the additional flaws of the NRP (publication bias, wrong control condition, poor design of most studies, etc.), the conclusions you can draw are much weaker. But in any case, the NRP is so out of date that it should now be ignored in favour of more recent meta-analyses (which also show little or no evidence that phonics is more effective than common alternative methods, as reviewed by Bowers, 2020, 2021). I think we are now going in circles, so I will keep silent as you suggest (unless your FRSS friend, or someone else, wants to chime in).

Jeff

Yes, Review of Education should have shown you my article for comment before publishing it, and yes, otherwise they should give you the right to reply, but none of that makes your arguments valid.

Galuschka et al. (2014): On whether calculating an interaction term was the correct approach I consulted a friend who is an expert statistician and a Fellow of the Royal Statistical Society. He confirmed that this was the wrong approach. I doubt you can claim greater expertise. However, despite what you say, Galuschka et al. did in fact report such a test – see if you can spot it.

Han (2009): This was included in Torgerson et al. (2019) – where, incidentally, we also got the date wrong – but on checking it while writing Brooks (2022) I found the activities classified as phonics mostly aren’t, and therefore dropped it. That the activities called phonics are mostly not is not ‘simply irrelevant’ as you claim – it means that Han’s effect size for phonics is unreliable, and should therefore neither be cited, nor used in comparing the effectiveness of various methods.

Sherman (2007): Yes, I failed to notice the massive outlier which fatally undermines the potentially significant effect size, and should have qualified my statement accordingly – as you should have too, perhaps.

Camilli et al. (2003): The three matters listed above are all minor, but this one is serious, especially since you fail to engage with the main thrust of my argument here. Let’s start by reminding ourselves of the claim in your 2020 article that systematic phonics v unsystematic or no phonics is the wrong comparison, and that the only valid comparison is between systematic and unsystematic phonics. When, in my email to you, I pointed out the fragility of Camilli et al.’s analysis attempting to separate unsystematic and no phonics, your first line of defence in your 9 March 2023 email was (I quote) “My critique does not hinge on the Camilli et al. findings…” In my 2023 article I list the numerous occasions in your 2020 article when you cite Camilli et al., and conclude that it was indeed a mainstay of your argument.

In your blog you allege that I have ‘selectively quoted [you] in a misleading way’, apparently by quoting only the first clause and the parenthesis of the paragraph in your 9 March email in which they appear. I do not accept that. I will answer it by quoting that paragraph in full, as you have in the blog, and analysing it:

“My critique does not hinge on the Camilli et al. findings (there is little evidence for phonics even if you ignore his point), but I don’t understand your criticism of the study. There are essentially no forms of instruction used in school that use NO phonics, so studies that completely ignore phonics are not appropriate to include in a control condition if you want to claim that systematic phonics is needed to improve existing classroom instruction.”

This paragraph appears to me to make four points:

1) That your critique [of the systematic v unsystematic or no phonics approach] does not hinge on the Camilli et al. findings

2) That there is little evidence for phonics even if you ignore Camilli et al.’s point

3) That there are essentially no forms of instruction used in school that use NO phonics

4) Therefore, that studies that completely ignore phonics are not appropriate to include in a control condition if you want to claim that systematic phonics is needed to improve existing classroom instruction.

1) I have dealt with this in my 2023 article and above, and rest this part of my case.

2) I refer you to the accumulated evidence in Torgerson et al. (2019) and Brooks (2022), and to all the systematic reviews and meta-analyses they are based on. I cannot see how you can seriously maintain that ‘there is little evidence for phonics’ when faced with all this.

3) I dispute the notion that ‘there are essentially no forms of instruction used in school that use NO phonics.’ This may be true in England nowadays (because of government mandates), but I’m quite sure it wasn’t in past decades when the studies underlying the various meta-analyses were undertaken. When Ken Goodman, Frank Smith and Stephen Krashen were most influential, there seem to have been many classrooms in North America where whole language reigned and phonics was entirely avoided. And Camilli et al., while trawling through the studies analysed by the National Reading Panel (2000)/Ehri et al. (2001), appear to have concluded that some of those studies did indeed include a ‘no phonics’ condition.

In this country Liz Waterland’s ‘apprenticeship’ approach encouraged many teachers to do without phonics – see Beard and Oakhill’s Reading by Apprenticeship? (NFER, 1994) for a critique. And if you check the tables of statistics in Cato et al. The teaching of initial literacy: How do teachers do it? (NFER, 1992), you’ll find survey evidence that there were some teachers in this country (very few, but they existed) who said they did not use phonics at all.

4) The crux of my argument against you, in both my 2022 encyclopedia article and my 2023 journal article, has been that Camilli et al.’s attempt to analyse unsystematic and no phonics separately was flawed. I note that your blog does not even mention this, so I will repeat: Camilli et al. merged the studies they coded ‘Not given/No information’ with those they coded ‘No phonics’ – see the paragraph immediately following their Table 2, where they also give reasons for doing this which I find both obscure and unconvincing. I stand by my conclusion that their critique fails to undermine the case for using ‘systematic v unsystematic or no phonics’ as the analytic frame in assessing the empirical evidence on phonics.

And I further stand by my conclusions that your case against phonics is unproven, and therefore that systematic phonics is a necessary (but not sufficient) component of effective initial literacy instruction.

Thanks, Greg, for responding. Let me first briefly respond to your points and then look at another recent example of the “science of reading” being misused to support phonics, with prominent researchers (again) distributing the false claims.

With regard to the Galuschka et al. (2014) study, I’m afraid your expert statistician and Fellow of the Royal Statistical Society has got it wrong, or you have misdescribed the situation to him or her. In this study, phonics obtained an effect size of g′ = 0.32 and a host of other methods obtained similar effect sizes. Let’s just consider color overlays, which obtained exactly the same effect size of g′ = 0.32. There is no statistical test that will show an effect size of .32 is larger than an effect size of .32. Researchers need to stop citing this study as providing evidence that phonics is more effective than alternative methods. And although irrelevant to the current point, as I note in Bowers (2020), Galuschka et al. *themselves* noted that there was evidence for publication bias in the phonics studies, and when they corrected for this, the effect size was g′ = 0.198.

Regarding Han (2009), we seem to agree: it provides no evidence that phonics is more effective than alternative methods.

Regarding Sherman (2007), we also seem to agree that it provides no evidence for phonics being more effective. Although if I were going to list the flaws of the meta-analysis, I would put the unorthodox choice of a .25 significance level at the top of the list.

So thus far we have three meta-analyses you selected to review that do not show any evidence that phonics is more effective than alternative methods. Then we get to the Camilli et al. meta-analysis. They make the important point that the NRP compared systematic phonics to a control condition that includes studies with *no* phonics. This means that the NRP did not even test whether systematic phonics is better than whole language that *does* include some “unsystematic” phonics. This is from the NRP (2000):

> Whole language teachers typically provide some instruction in phonics, usually as part of invented spelling activities or through the use of graphophonemic prompts during reading (Routman, 1996). However, their approach is to teach it unsystematically and incidentally in context as the need arises. The whole language approach regards letter-sound correspondences, referred to as graphophonemics, as just one of three cueing systems (the others being semantic/meaning cues and syntactic/language cues) that are used to read and write text. Whole language teachers believe that phonics instruction should be integrated into meaningful reading, writing, listening, and speaking activities and taught incidentally when they perceive it is needed. As children attempt to use written language for communication, they will discover naturally that they need to know about letter-sound relationships and how letters function in reading and writing. When this need becomes evident, teachers are expected to respond by providing the instruction.

And in the UK, before systematic phonics was legally mandated in England, Her Majesty's Inspectorate (1990) reported that "…phonic skills were taught almost universally and usually to beneficial effect" (p. 2) and that "Successful teachers of reading and the majority of schools used a mix of methods each reinforcing the other as the children's reading developed" (p. 15).

Whether Camilli et al.'s classification of the NPR studies into different degrees of systematic phonics is reliable is an interesting question, but regardless, the choice of control condition undermines any use of the NPR to claim that systematic phonics is better than whole language. And the problems with the NPR do not stop there. For example, the NPR did not even assess long-term effects on spelling, reading texts, or reading comprehension. The short-term effects were reduced for struggling readers. And when Torgerson et al. (2006) looked selectively at the randomized controlled studies in the NPR, they not only found evidence for publication bias in these studies but also found weaker and non-significant effects (even though the comparison still used the wrong control condition). In any case, it is silly to be citing the NPR at this late date: all the studies are well over 20 years old now, and more recent meta-analyses can capture these as well as more recent (and better-designed) studies. So, again, this meta-analysis should not be used to support systematic phonics over alternative methods.

The Brooks (2023) review not only ignored the many other meta-analyses I reviewed (which also provide no evidence that systematic phonics is better than common alternative methods), but also ignored the reading outcomes in England since systematic phonics was introduced in 2007. And this is where more mischaracterizations and bad science are being widely promoted. In the above blogpost I criticized Rastle for suggesting that phonics has led "pupils in England to become some of the world's highest achieving readers", and since then, Buckingham has written: "The UK Government made the Year 1 Phonics Screening Check mandatory in English schools in 2012. There is good evidence suggesting that the Phonics Screening Check played a significant role in England's improved performance in the most recent PIRLS assessment". See: https://multilit.com/wp-content/uploads/2023/08/NSRA-Submission-MultiLit-2023.pdf But look at the PIRLS results in my blogpost. Not only did the PIRLS results not improve in the most recent round (the score went down by one point), but the biggest improvement in PIRLS predates the introduction of the PSC. You couldn't make this nonsense up. But again, you have Pamela Snow, Kevin Wheldall, and others liking or retweeting it to their thousands of followers, just as they did with Kathy Rastle's tweet.